set_cuda_backend

- torchcodec.decoders.set_cuda_backend(backend: str) → Generator[None, None, None]

Context manager to set the CUDA backend for VideoDecoder.

This context manager allows you to specify which CUDA backend implementation to use when creating VideoDecoder instances with CUDA devices.

Note

We recommend trying the “beta” backend instead of the default “ffmpeg” backend! The beta backend is faster, and will eventually become the default in future versions. It may have rough edges that we’ll polish over time, but it’s already quite stable and ready for adoption. Let us know what you think!

Only the creation of the decoder needs to be inside the context manager; the decoding methods can be called outside of it. You still need to pass device="cuda" when creating the VideoDecoder instance: if a CUDA device isn't specified, this context manager has no effect. See the example below.

This context manager is thread-safe and async-safe.

- Parameters:

backend (str) – The CUDA backend to use. Can be “ffmpeg” (default) or “beta”. We recommend trying “beta” as it’s faster!

Example

>>> from torchcodec.decoders import VideoDecoder, set_cuda_backend
>>> with set_cuda_backend("beta"):
...     decoder = VideoDecoder("video.mp4", device="cuda")
...
>>> # Only the decoder creation needs to be part of the context manager.
>>> # The decoder will now use the beta CUDA implementation:
>>> decoder.get_frame_at(0)

Examples using set_cuda_backend:

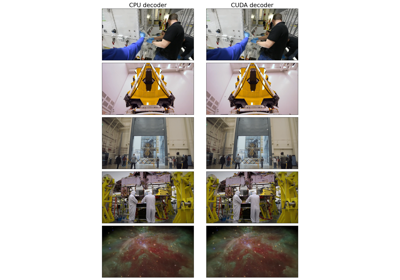

Accelerated video decoding on GPUs with CUDA and NVDEC
